What are small language models and how do they work?

- What are small language models?

- Where did small language models come from?

- How do small language models work, and how are they used today?

- Do small language models matter?

- TL;DR

What are small language models?

Small language models (SLMs) are compact AI systems designed to perform language-related tasks while using far less computational power than large models like GPT-4 or Claude. Because they require fewer resources, SLMs can run directly on personal devices or with minimal server support, without sacrificing their ability to handle targeted tasks effectively.

By operating within smaller memory and processing limits, SLMs make advanced AI capabilities more accessible. They’re also typically cheaper to run than large-scale models, which often depend on costly cloud infrastructure.
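A rough way to see what "smaller memory limits" means is to estimate how much memory a model's weights occupy: parameter count times bits per parameter, divided by eight to get bytes. The sketch below does that arithmetic. The function name `weight_memory_gb` and the parameter counts are illustrative assumptions, not figures for any specific model, and the estimate covers weights only, not the extra memory needed while the model runs.

```python
def weight_memory_gb(num_parameters, bits_per_parameter):
    """Approximate memory needed to store model weights, in gigabytes (10^9 bytes)."""
    total_bytes = num_parameters * bits_per_parameter / 8
    return total_bytes / 1e9


# Hypothetical sizes chosen only to illustrate the arithmetic.
small_16bit = weight_memory_gb(3_000_000_000, 16)    # 3B parameters, 16-bit weights
small_4bit = weight_memory_gb(3_000_000_000, 4)      # same model, 4-bit weights
large_16bit = weight_memory_gb(175_000_000_000, 16)  # 175B parameters, 16-bit weights

print(small_16bit)  # 6.0
print(small_4bit)   # 1.5
print(large_16bit)  # 350.0
```

Under these assumptions, the smaller model fits in the memory of a typical laptop or phone, while the larger one needs multiple server-class accelerators just to hold its weights.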

Several factors are driving the current surge in interest:

- Privacy: SLMs can run on a local device, keeping sensitive data from leaving your system

- Cost: They reduce the need for expensive hardware and ongoing cloud hosting fees

- Environmental impact: Their smaller size means lower energy usage and a reduced carbon footprint

Because SLMs can function without constant internet access, they’re well-suited for situations where bandwidth is limited, privacy rules are strict, or infrastructure is constrained. They offer a balanced approach: capable enough for meaningful work while avoiding the heavy overhead of large AI systems.

Where did small language models come from?

SLMs emerged as a direct response to the rapid scaling of flagship AI models. As model sizes ballooned into the hundreds of billions of parameters, the infrastructure needed to train and run them became increasingly impractical for many use cases. This pushed researchers toward building smaller, more efficient alternatives that could still perform well in specialized contexts.

Notable contributors to this shift include:

- Google: With models like Gemma

- Microsoft: With the Phi-3 family

- Meta: With the LLaMA family

- Academic and open-source communities: Working on distilling large models into smaller, deployable versions

Many of these models are purpose-built for efficiency, fine-tuned for narrow applications, and optimized for environments where performance and resource use both matter.

How do small language models work, and how are they used today?

While they typically share the same underlying transformer-based neural network architecture as their larger counterparts, SLMs rely on targeted optimizations to keep their size and computational needs low. Common techniques include:

- Knowledge distillation: Training a smaller model to mimic a larger one’s outputs

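- Quantization: Storing weights and running calculations at lower numerical precision (for example, 8-bit integers instead of 16-bit or 32-bit floating point) to save space and speed up processing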

- Pruning: Removing less important network connections to reduce complexity
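The three techniques above can be sketched in a few lines of plain Python. This is a minimal illustration on short lists of numbers, not how any particular SLM or library implements them. The function names (`quantize_int8`, `prune_by_magnitude`, `distillation_loss`) and the temperature value are assumptions made for the example. Real systems apply the same ideas to millions or billions of weights, usually with more refined variants.

```python
import math


def quantize_int8(weights):
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest absolute value."""
    k = int(len(weights) * fraction)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature softens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL divergence between the teacher's and student's softened outputs.

    The smaller (student) model is trained to make this value small,
    which pushes its outputs toward the larger (teacher) model's.
    """
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    return sum(t * math.log(t / s) for t, s in zip(teacher, student) if t > 0)


weights = [0.5, -1.27, 0.01, 0.9]
quantized, scale = quantize_int8(weights)       # small integers plus one scale factor
restored = dequantize(quantized, scale)         # close to, but not exactly, the originals
pruned = prune_by_magnitude(weights, 0.5)       # half of the weights set to zero
loss = distillation_loss([3.0, 2.0, 1.0], [1.0, 2.0, 3.0])  # positive: outputs disagree
```

Each function trades a little accuracy for a smaller or cheaper model: quantization stores each weight in fewer bits, pruning leaves fewer nonzero weights to store and multiply, and the distillation loss gives a small model a training signal derived from a larger one.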

Rather than aiming for broad, general-purpose performance, most SLMs are fine-tuned for specific domains. This makes them highly effective in environments where privacy, speed, or offline operation is a priority—such as on-device virtual assistants, embedded systems, edge computing, or specialized enterprise tools.

Do small language models matter?

SLMs broaden access to advanced language capabilities by removing the dependency on massive infrastructure and high recurring costs. This allows organizations of all sizes to integrate AI into their workflows.

Because SLMs can run locally, teams can work with AI directly where the data lives, cutting out network delays and reducing dependency on third-party services.

TL;DR

Small language models offer a way to deploy AI that’s resource-efficient, private, and adaptable. They’re particularly valuable when you need offline functionality, strict data control, or cost-effective performance.

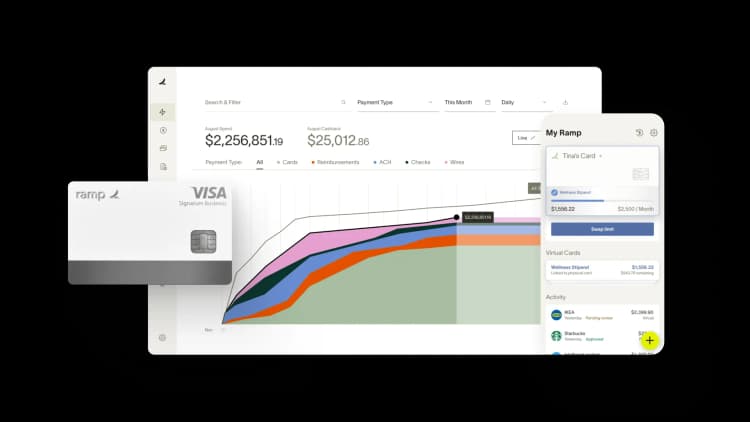

“In the public sector, every hour and every dollar belongs to the taxpayer. We can't afford to waste either. Ramp ensures we don't.”

Carly Ching

Finance Specialist, City of Ketchum

“Ramp gives us one structured intake, one set of guardrails, and clean data end‑to‑end— that’s how we save 20 hours/month and buy back days at close.”

David Eckstein

CFO, Vanta

“Ramp is the only vendor that can service all of our employees across the globe in one unified system. They handle multiple currencies seamlessly, integrate with all of our accounting systems, and thanks to their customizable card and policy controls, we're compliant worldwide. ”

Brandon Zell

Chief Accounting Officer, Notion

“When our teams need something, they usually need it right away. The more time we can save doing all those tedious tasks, the more time we can dedicate to supporting our student-athletes.”

Sarah Harris

Secretary, The University of Tennessee Athletics Foundation, Inc.

“Ramp had everything we were looking for, and even things we weren't looking for. The policy aspects, that's something I never even dreamed of that a purchasing card program could handle.”

Doug Volesky

Director of Finance, City of Mount Vernon

“Switching from Brex to Ramp wasn't just a platform swap—it was a strategic upgrade that aligned with our mission to be agile, efficient, and financially savvy.”

Lily Liu

CEO, Piñata

“With Ramp, everything lives in one place. You can click into a vendor and see every transaction, invoice, and contract. That didn't exist in Zip. It's made approvals much faster because decision-makers aren't chasing down information—they have it all at their fingertips.”

Ryan Williams

Manager, Contract and Vendor Management, Advisor360°

“The ability to create flexible parameters, such as allowing bookings up to 25% above market rate, has been really good for us. Plus, having all the information within the same platform is really valuable.”

Caroline Hill

Assistant Controller, Sana Benefits