What is vLLM? How it works and where it came from

- What is vLLM?

- Where did vLLM come from?

- How does vLLM work, and how is it typically used today?

- Does vLLM matter?

- TL;DR

- The role of scalable AI infrastructure in modern automation

- AI agent for finance automation

What is vLLM?

vLLM is an open-source library designed to make large language model (LLM) inference and serving faster and more efficient. It does this through memory and scheduling optimizations. The most notable is its PagedAttention mechanism, which manages the attention key-value (KV) cache in small fixed-size blocks. This reduces wasted GPU memory, so more requests can be processed together on the same hardware.
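During generation, a model keeps the key and value vectors of every previous token in memory so it does not have to recompute them. A rough size estimate of this key-value (KV) cache shows why managing it well matters so much. The sketch below assumes standard multi-head attention with 16-bit values (models that use grouped-query attention store less), and the function name is illustrative, not part of vLLM. The OPT-13B shape (40 layers, hidden size 5,120) is the example used in the original vLLM paper.

```python
def kv_cache_bytes_per_token(num_layers: int, hidden_size: int, bytes_per_value: int = 2) -> int:
    """Estimate the KV cache footprint of one token under standard multi-head attention.

    Each layer stores one key vector and one value vector of length hidden_size
    (hence the factor of 2); bytes_per_value=2 assumes FP16/BF16 storage.
    """
    return 2 * num_layers * hidden_size * bytes_per_value


# OPT-13B: 40 layers, hidden size 5120.
per_token = kv_cache_bytes_per_token(num_layers=40, hidden_size=5120)
print(per_token / 1024)          # 800.0 (KiB per token)
print(per_token * 2048 / 2**30)  # 1.5625 (GiB for one 2,048-token sequence)
```

At roughly 800 KiB per token, a single 2,048-token request can need about 1.6 GiB, which is why reserving memory for every request's maximum possible length wastes so much GPU capacity.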

This combination of higher throughput and lower infrastructure demand makes vLLM relevant for teams running AI at scale. It offers two clear operational advantages:

- Higher request handling capacity on the same hardware

- Fewer GPUs needed for the same workload, lowering overall serving costs

Where did vLLM come from?

vLLM originated at UC Berkeley's Sky Computing Lab. The project was introduced in the 2023 research paper "Efficient Memory Management for Large Language Model Serving with PagedAttention," which proposed a new approach to managing memory for LLM inference.

Initially, vLLM was a research tool aimed at improving how memory for the attention key-value (KV) cache is allocated during inference. The open-source release on GitHub quickly drew interest from both academic researchers and engineering teams in industry. Its capabilities have expanded significantly since then:

- Transitioned from a memory optimization research tool into a full production-grade serving system

- Added support for continuous batching, quantization, and distributed inference

- Improved compatibility with popular deployment tooling, including an OpenAI-compatible API server

This progression mirrors broader industry needs—applications using LLMs have grown more complex, and infrastructure must keep pace without runaway cost increases.

How does vLLM work, and how is it typically used today?

In production environments, vLLM typically acts as a high-performance model serving layer. Because it can expose an OpenAI-compatible HTTP API, teams can often adopt it without redesigning existing deployment pipelines or rewriting client code.
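As a sketch of what adoption can look like from the client side, the snippet below builds a request for an OpenAI-style chat completion endpoint, the kind of API a vLLM server can expose. It assumes a server is already running at `http://localhost:8000` (for example, one started with `vllm serve <model>`). `my-model` is a placeholder that must match the model name the server was started with, and the helper names are hypothetical. Only the Python standard library is used.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str, max_tokens: int = 128) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # must match the name the server is serving
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(req: urllib.request.Request) -> str:
    """Send the request to a running server and return the first completion's text."""
    with urllib.request.urlopen(req, timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With a server running, `send_chat_request(build_chat_request("http://localhost:8000", "my-model", "Summarize PagedAttention in one sentence."))` would return the generated text. Because the request follows the OpenAI API shape, existing OpenAI client code can usually be pointed at the vLLM server by changing only the base URL.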

Its main purpose is to deliver low-latency, cost-effective LLM serving. This is particularly valuable for systems requiring real-time language processing, such as chatbots, virtual assistants, content generation tools, and internal AI-powered productivity applications.

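The key to vLLM’s performance is PagedAttention, which adapts virtual memory and paging concepts from operating systems to the attention key-value (KV) cache. Instead of reserving one large contiguous buffer per request, it stores each request's KV cache in fixed-size blocks that can sit anywhere in GPU memory, tracked through a per-request block table. This nearly eliminates fragmentation and over-reservation, lets requests share blocks when their contents are identical (for example, when generating several samples from one prompt), and frees room for larger batches in one of the most memory-intensive parts of inference.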
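The paging idea can be illustrated with a toy allocator that keeps a per-sequence block table. This is a simplified sketch of the bookkeeping only, not vLLM's actual implementation: it hands out fixed-size physical blocks from a shared pool as a sequence grows, so unused space is confined to the sequence's last, partially filled block. The class and method names and the 16-token block size are assumptions for illustration.

```python
class PagedKVCacheAllocator:
    """Toy allocator that maps each sequence's logical KV blocks to physical blocks.

    Illustrative only: it tracks block bookkeeping, not the key/value tensors themselves.
    """

    def __init__(self, num_physical_blocks: int, block_size: int = 16):
        self.block_size = block_size
        self.free_blocks = list(range(num_physical_blocks))  # shared pool of unused physical block ids
        self.block_tables = {}  # seq id -> physical block ids, in logical order
        self.num_tokens = {}    # seq id -> tokens stored so far

    def append_token(self, seq_id: str) -> None:
        """Reserve a slot for one more token, allocating a new block only when the last one is full."""
        table = self.block_tables.setdefault(seq_id, [])
        count = self.num_tokens.get(seq_id, 0)
        if count % self.block_size == 0:  # no block yet, or the last block is full
            if not self.free_blocks:
                raise MemoryError("no free KV cache blocks")
            table.append(self.free_blocks.pop())  # any free block works; it need not be contiguous
        self.num_tokens[seq_id] = count + 1

    def free(self, seq_id: str) -> None:
        """Return a finished sequence's blocks to the shared pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.num_tokens.pop(seq_id, None)

    def wasted_slots(self, seq_id: str) -> int:
        """Allocated but unused token slots; these can only be in the sequence's last block."""
        allocated = len(self.block_tables.get(seq_id, [])) * self.block_size
        return allocated - self.num_tokens.get(seq_id, 0)


# Usage: a 20-token sequence with 16-token blocks.
alloc = PagedKVCacheAllocator(num_physical_blocks=8, block_size=16)
for _ in range(20):
    alloc.append_token("request-a")
print(alloc.block_tables["request-a"])  # [7, 6]
print(alloc.wasted_slots("request-a"))  # 12
alloc.free("request-a")
print(len(alloc.free_blocks))           # 8
```

In this toy model, a 20-token sequence occupies two blocks and leaves 12 slots idle, however long it might eventually grow. A contiguous buffer pre-allocated for a 2,048-token maximum would leave 2,028 slots idle for the same sequence.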

In addition, vLLM uses continuous batching. Rather than assembling a fixed batch and running it until every request in it finishes, it schedules work at each decoding step: finished requests leave the batch immediately and waiting requests take their place. This keeps GPU utilization high without making short requests wait on long ones.
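The effect of continuous batching can be seen in a small simulation that counts decoding steps, comparing static batching with iteration-level scheduling (re-filling the batch after every step). It is a simplified sketch under stated assumptions: each running request emits one token per step, prefill cost and memory limits are ignored, and the function names and request lengths are hypothetical.

```python
from collections import deque


def static_batching_steps(output_lengths: list[int], max_batch: int) -> int:
    """Each batch runs until its longest request finishes; nothing joins mid-batch."""
    steps = 0
    for i in range(0, len(output_lengths), max_batch):
        steps += max(output_lengths[i:i + max_batch])
    return steps


def continuous_batching_steps(output_lengths: list[int], max_batch: int) -> int:
    """Iteration-level scheduling: after every decode step, finished requests
    leave and waiting requests take their slots immediately."""
    waiting = deque(output_lengths)
    running: list[int] = []  # tokens still to generate for each in-flight request
    steps = 0
    while waiting or running:
        # Fill any free slots from the queue before the next step.
        while waiting and len(running) < max_batch:
            running.append(waiting.popleft())
        # One decode step: every running request emits one token.
        running = [remaining - 1 for remaining in running]
        running = [remaining for remaining in running if remaining > 0]
        steps += 1
    return steps


lengths = [100, 5, 5, 5, 100, 5, 5, 5]  # hypothetical output lengths, in tokens
print(static_batching_steps(lengths, max_batch=4))      # 200
print(continuous_batching_steps(lengths, max_batch=4))  # 105
```

With two long requests and six short ones, static batching holds each batch open until its 100-token request finishes (200 steps in total). Iteration-level scheduling lets the second long request start as soon as the first batch's short requests free their slots, finishing everything in 105 steps.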

Does vLLM matter?

For organizations deploying large language models, performance and cost are constant constraints. vLLM helps address both:

- Supports more concurrent users with fewer GPUs

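- Can reduce inference costs by roughly 50–70% compared to some traditional serving setups, because higher throughput means fewer GPU-hours per request (the original vLLM paper reports 2–4× higher throughput than systems such as FasterTransformer and Orca at similar latency)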
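The link between throughput and cost is simple arithmetic: on fixed hardware, cost per token is the hourly GPU cost divided by the tokens served per hour, so a k-fold throughput gain cuts cost per token by 1 - 1/k. A 2× gain saves 50% and a 4× gain saves 75%, which is consistent with the range above. The sketch below uses a hypothetical $2-per-GPU-hour price and hypothetical throughput figures; real savings depend on the model, workload, latency targets, and the baseline being replaced.

```python
def cost_per_million_tokens(gpu_hourly_cost: float, num_gpus: int, tokens_per_second: float) -> float:
    """Serving cost (same currency as gpu_hourly_cost) per one million generated tokens."""
    tokens_per_hour = tokens_per_second * 3600
    return gpu_hourly_cost * num_gpus / tokens_per_hour * 1_000_000


def cost_reduction(throughput_multiplier: float) -> float:
    """Fraction of cost saved when the same hardware serves throughput_multiplier times more tokens."""
    return 1 - 1 / throughput_multiplier


# Hypothetical deployment: one GPU at $2/hour, before and after doubling throughput.
baseline = cost_per_million_tokens(2.0, num_gpus=1, tokens_per_second=1000)
improved = cost_per_million_tokens(2.0, num_gpus=1, tokens_per_second=2000)
print(round(baseline, 4), round(improved, 4))  # 0.5556 0.2778
print(cost_reduction(2), cost_reduction(4))    # 0.5 0.75
```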

These efficiencies can shift AI from being an experimental capability to an integral part of core products, without unsustainable infrastructure spend. They also give teams more flexibility to deploy larger or more advanced models while maintaining service-level goals.

The impact extends beyond hardware utilization. By making serving more efficient, vLLM enables real-time applications that were previously too slow or costly to justify—such as live content recommendations, interactive tutoring systems, and adaptive user interfaces.

TL;DR

vLLM is a specialized framework that speeds up large language model inference and reduces serving costs through advanced memory management and dynamic batching. It helps teams deploy AI features that are both responsive and cost-effective, turning technical limitations into solvable optimization challenges.

The role of scalable AI infrastructure in modern automation

vLLM has gained attention for making large language model serving significantly faster and more resource-efficient, which directly affects how quickly teams can experiment, deploy, and iterate with AI. While its primary use case is in AI research and application development, the underlying concept (building infrastructure that can handle large volumes of requests without compromising on speed or cost) has broad relevance.

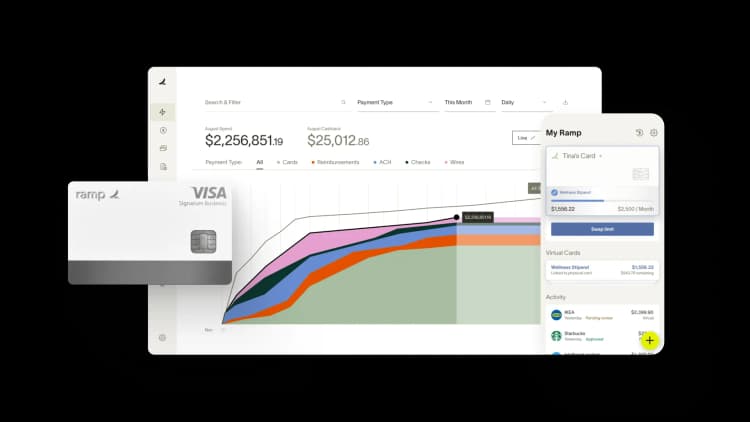

At Ramp, the same principle shapes how we design AI-powered financial automation: scalable systems that deliver fast, accurate results for finance workflows, whether that’s processing invoices, reconciling transactions, or surfacing real-time spend insights.

AI agent for finance automation

Ramp recently introduced its first AI agent to handle the routine, repetitive tasks that consume finance teams’ time each month. Take a $5 latte: uploading the receipt, reviewing the charge, and coding the expense in NetSuite can add up to 14 minutes and more than $20 in labor for a single transaction. Multiply that by thousands of expenses and the cost is significant.

By automating these small but frequent tasks, the AI agent frees teams to focus on higher-value work and decision-making.

Explore how Ramp’s AI agent fits into your finance processes and where it could remove the most friction. Learn more about Ramp Agents.
