- Focus on outcomes, not interfaces

- Separate general knowledge and private data

- Explainability isn’t enough—offer users control

- Build guardrails, not censorship

- Creating better AI

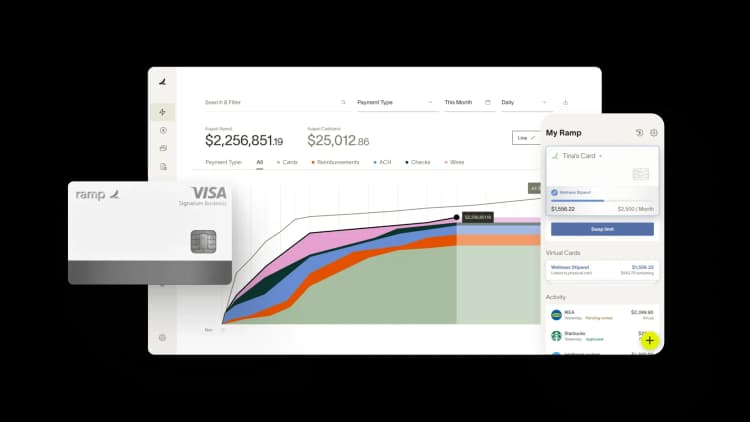

Artificial intelligence is transforming how we work and build software. Companies like Ramp, Amazon, and Google have been using AI for years to provide recommendations, extract insights, and combat fraud. Since our founding, we’ve built AI tools that have saved customers over $400 million to date.

However, most AI products today are superficial chat experiences that offer customers little real value. We see these as prime examples of "AI washing": using AI for the hype rather than the impact.

At Ramp, we follow a few principles to ensure our models consistently deliver meaningful outcomes and, more importantly, save customers time and money.

Focus on outcomes, not interfaces

Many AI implementations are a thin chat layer on top of a product, one that customers have to go out of their way to use. But AI should be more than a flashy chatbot interface: it should be embedded in your workflows to actually get things done.

For example, when you’re managing contracts on Ramp, you don’t want to ask a chatbot question after question; you want the key details extracted and analyzed for you. Having AI pull out and highlight the most important terms from an automatically imported contract is far more useful than a chatbot that makes you upload documents manually and pose individual, one-off questions. AI should work for people, not the other way around.

Separate general knowledge and private data

Large language models are good at common-sense reasoning because they have been trained on huge datasets to learn relationships between concepts. However, for a fintech platform like Ramp, models also need to understand domain-specific concepts and sensitive customer data that aren’t found in these general datasets. Unlike companies that train models end-to-end on private data, we safeguard data by splitting models into two categories:

- General models trained on aggregated and masked customer data to handle common tasks. These shared models learn patterns across customers by understanding the structure of the data rather than the actual contents.

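- Sensitive models that use private customer data only temporarily, without storing it, through a technique called in-context learning: the data is supplied in the model’s prompt at request time rather than used to train the model’s weights.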
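The idea of learning "the structure of the data rather than the actual contents" can be illustrated with a small masking step. This is a minimal sketch under stated assumptions: `mask_text`, `mask_record`, and the regex rules are hypothetical and not Ramp's pipeline, and rules this simple would miss plenty of sensitive text (names, for example). What it shows is that field names, nesting, and value types survive masking while the values themselves do not.

```python
import re
from typing import Any

# Hypothetical masking rules, for illustration only: concrete values in free
# text are replaced with placeholders that keep only the kind of value.
_TEXT_MASKS = [
    (re.compile(r"\$\d[\d,]*(?:\.\d+)?"), "<AMOUNT>"),
    (re.compile(r"\b\d{4}-\d{2}-\d{2}\b"), "<DATE>"),
    (re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"), "<EMAIL>"),
]


def mask_text(text: str) -> str:
    """Replace dollar amounts, ISO dates, and email addresses with placeholders."""
    for pattern, placeholder in _TEXT_MASKS:
        text = pattern.sub(placeholder, text)
    return text


def mask_record(record: Any) -> Any:
    """Keep a record's structure (keys, nesting, value types) but hide its values."""
    if isinstance(record, dict):
        return {key: mask_record(value) for key, value in record.items()}
    if isinstance(record, list):
        return [mask_record(item) for item in record]
    if isinstance(record, bool):  # check bool before int: bool is a subclass of int
        return "<BOOL>"
    if isinstance(record, (int, float)):
        return "<NUMBER>"
    if isinstance(record, str):
        return mask_text(record)
    return "<VALUE>"  # anything else (None, custom objects) is hidden entirely


if __name__ == "__main__":
    contract = {
        "terms": "Pay $12,000.00 annually from 2024-01-15; invoices to ap@acme.com",
        "seats": 40,
        "auto_renew": True,
        "line_items": [{"description": "Platform license", "unit_price": 300.0}],
    }
    print(mask_record(contract))
```

A shared model trained on records like these can still learn that contracts have terms, seat counts, and renewal flags without ever seeing a specific customer's prices or contacts.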

For example, our contract pricing benchmarks use a general model to extract line items and terms from vendor documents. We use a general model for this purpose so that it performs well across a wide variety of contracts. If customers opt in to share pricing data derived from their contracts, we then use in-context learning to benchmark their pricing against pricing data shared by similar customers.
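The opt-in benchmarking step might look roughly like the sketch below, which assembles an in-context prompt from other customers' shared prices. Everything here is hypothetical: the `PricePoint` fields, the `opted_in` flag, and treating "same vendor" as "similar customer" are assumptions for illustration. The call to an actual language model is omitted.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class PricePoint:
    customer_id: str
    vendor: str
    annual_price_per_seat: float
    opted_in: bool  # hypothetical flag: customer agreed to share derived pricing data


def build_benchmark_prompt(own: PricePoint, peers: List[PricePoint]) -> Optional[str]:
    """Assemble a prompt for in-context benchmarking.

    Peer prices are placed in the prompt for this one request; nothing here
    updates model weights or stores the data anywhere else.
    """
    if not own.opted_in:
        return None  # customers who have not opted in get no benchmark
    # "Similar customers" is approximated here as "same vendor" (an assumption).
    comparables = [
        p for p in peers
        if p.opted_in and p.vendor == own.vendor and p.customer_id != own.customer_id
    ]
    lines = [
        f"Vendor: {own.vendor}",
        "Prices paid by similar customers (per seat, per year), anonymized:",
    ]
    # Only prices go into the prompt; peer customer IDs are never included.
    lines += [f"- ${p.annual_price_per_seat:,.2f}" for p in comparables]
    lines.append(
        f"This customer pays ${own.annual_price_per_seat:,.2f}. "
        "Is that above, below, or in line with the others?"
    )
    return "\n".join(lines)


if __name__ == "__main__":
    me = PricePoint("cust_1", "Acme", 300.0, opted_in=True)
    others = [
        PricePoint("cust_2", "Acme", 250.0, opted_in=True),
        PricePoint("cust_3", "Acme", 180.0, opted_in=False),  # excluded: no opt-in
        PricePoint("cust_4", "Globex", 90.0, opted_in=True),  # excluded: other vendor
    ]
    print(build_benchmark_prompt(me, others))
```

Because peer data lives only in the prompt for a single request, none of it is baked into the model's weights. That is what distinguishes in-context learning from training on private data.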

By separating our models into general and private domains, we enable powerful insights while upholding data privacy. Customers can choose to share their contract data to improve Ramp’s models, but only by explicitly opting in.

This framework ensures that no customer's contract information is used without their permission. It allows us to build models that understand both general knowledge and customer specifics, without risking accidental exposure of private data.

Explainability isn’t enough—offer users control

The best AI is useless if users don’t trust it. Explainability, which attempts to trace how models make their decisions, aims to build that trust. But explanations aren’t always helpful or relevant; it’s more important that models improve and respond to user feedback.

For example, if Ramp’s spend intelligence model incorrectly codes a purchase to the wrong accounting category, a lengthy explanation of the model logic isn’t particularly useful. We simply allow customers to provide feedback so the model learns for the future. Focusing on control and continuous improvement is more meaningful than attempting to explain every AI decision.
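One simple way to make corrections take effect is to layer them in front of the model. The sketch below assumes a hypothetical `FeedbackCategorizer` that overrides a base model's category for a merchant once enough user corrections agree. It is a stand-in for illustration, not a description of how Ramp's spend intelligence model learns. A production system might also feed these corrections back into retraining.

```python
from collections import Counter, defaultdict
from typing import Callable, DefaultDict


class FeedbackCategorizer:
    """Put user corrections in front of a base categorization model.

    Hypothetical design: once enough corrections agree for a merchant, they
    override the model's guess for future purchases from that merchant.
    """

    def __init__(self, base_model: Callable[[str], str], min_votes: int = 1) -> None:
        self.base_model = base_model
        self.min_votes = min_votes
        self._votes: DefaultDict[str, Counter] = defaultdict(Counter)

    def record_feedback(self, merchant: str, correct_category: str) -> None:
        """Store one user's correction for this merchant."""
        self._votes[merchant][correct_category] += 1

    def categorize(self, merchant: str) -> str:
        """Return the top correction if it has enough votes, else the model's guess."""
        votes = self._votes.get(merchant)  # .get avoids creating empty entries
        if votes:
            category, count = votes.most_common(1)[0]
            if count >= self.min_votes:
                return category
        return self.base_model(merchant)


if __name__ == "__main__":
    categorizer = FeedbackCategorizer(lambda merchant: "Office Supplies")  # stub model
    print(categorizer.categorize("Delta Air Lines"))  # the stub's guess
    categorizer.record_feedback("Delta Air Lines", "Travel")
    print(categorizer.categorize("Delta Air Lines"))  # now follows the correction
```

Raising `min_votes` trades responsiveness for robustness against a single mistaken correction.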

Build guardrails, not censorship

As AI systems gain wider adoption, the risks posed by inappropriate, nonsensical, or offensive outputs also increase. Rather than censoring a model’s outputs after the fact, we design models so that unwanted outputs are impossible in the first place. In contrast, models like ChatGPT take natural language inputs and apply censorship filters to their outputs, blocking offensive or nonsensical responses. The problem is that censorship can be incomplete: it’s difficult for filters to anticipate every possible unwanted output, especially as language models become more advanced.

Ramp’s Copilot AI is great at turning natural language into search queries over customer data. But instead of having the model respond directly in plain text (which could contain unwanted or harmful content), we display the results of those queries in predefined blocks of interactive, structured data. This lets Copilot interpret the nuances of natural language while keeping its outputs safe and controlled.
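The Copilot pattern can be sketched as follows. The model's only job is to emit a structured query, which is checked against allowlists before it runs, and the answer is always rendered as one of a few predefined block types. The block types, field names, and the `parse_query` and `run_query` helpers are hypothetical, and the language-model call is replaced by a hard-coded JSON string.

```python
import json
import operator
from typing import Any, Dict, List

# Hypothetical allowlists: the only shapes of answer the UI knows how to render.
BLOCK_TYPES = ("transaction_table", "spend_summary")
FIELDS = {"merchant": str, "category": str, "amount": (int, float), "date": str}
OPS = {"eq": operator.eq, "gt": operator.gt, "lt": operator.lt}


def _allowed(value: Any, options) -> bool:
    """True only for a string that is one of the allowed options."""
    return isinstance(value, str) and value in options


def parse_query(model_output: str) -> Dict[str, Any]:
    """Accept the model's output only if it is a query built from allowlisted parts."""
    query = json.loads(model_output)  # malformed JSON raises a ValueError subclass
    if not isinstance(query, dict) or not _allowed(query.get("block"), BLOCK_TYPES):
        raise ValueError("unsupported or missing block type")
    filters = query.get("filters", [])
    if not isinstance(filters, list):
        raise ValueError("filters must be a list")
    for f in filters:
        if not (
            isinstance(f, dict)
            and _allowed(f.get("field"), FIELDS)
            and _allowed(f.get("op"), OPS)
            and isinstance(f.get("value"), FIELDS[f["field"]])  # value type must fit the field
        ):
            raise ValueError(f"disallowed filter: {f!r}")
    return {"block": query["block"], "filters": filters}


def run_query(query: Dict[str, Any], rows: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Execute a validated query and wrap the matching rows in a predefined block."""
    def matches(row: Dict[str, Any]) -> bool:
        return all(OPS[f["op"]](row[f["field"]], f["value"]) for f in query["filters"])

    return {"type": query["block"], "rows": [row for row in rows if matches(row)]}


if __name__ == "__main__":
    rows = [
        {"merchant": "Delta", "category": "Travel", "amount": 420.0, "date": "2024-05-01"},
        {"merchant": "Staples", "category": "Office", "amount": 35.0, "date": "2024-05-02"},
    ]
    # Pretend this JSON came back from the language model for "show big purchases".
    raw = '{"block": "transaction_table", "filters": [{"field": "amount", "op": "gt", "value": 100}]}'
    print(run_query(parse_query(raw), rows))
```

Nothing the model writes is shown to the user verbatim. The worst a bad generation can do is fail validation, and every displayed value comes from the customer's own data.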

This "guardrails, not censorship" approach lets us use powerful language models with confidence that we can build and maintain safe constraints on their operation. For example, we use techniques like Jsonformer (pioneered at Ramp), which constrains a language model to generate output that follows a predefined JSON schema, so the result can be safely parsed and used. Overall, designing for safety from the start leads to more robust and trustworthy AI than retroactively censoring model outputs.
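Jsonformer itself works at the token level. It emits the fixed JSON structure (braces, keys, quotes) programmatically and asks the model only for the content tokens of each value. The sketch below approximates that idea at the value level with a stubbed model. It illustrates the principle rather than reproducing Jsonformer's implementation or API, and `fill_schema`, the schema subset it supports (objects, strings, numbers, booleans), and `stub_model` are assumptions.

```python
import json
import math
from typing import Any, Callable, Dict

# Any function that continues a prompt; in practice a language model (stubbed below).
Generate = Callable[[str], str]


def fill_schema(schema: Dict[str, Any], prompt: str, generate: Generate) -> Any:
    """Produce a value matching `schema`, asking the model only for leaf values.

    The program itself emits the structure (object keys and nesting), so the
    model has no chance to produce malformed JSON or extra fields. Leaf values
    are parsed into the schema's type, and anything unparseable is rejected.
    """
    kind = schema["type"]
    if kind == "object":
        return {
            key: fill_schema(subschema, f"{prompt}\n{key}:", generate)
            for key, subschema in schema["properties"].items()
        }
    raw = generate(prompt).strip()
    if kind == "string":
        return raw
    if kind == "number":
        value = float(raw)  # raises ValueError if the model did not produce a number
        if not math.isfinite(value):  # NaN/inf would not be valid JSON
            raise ValueError(f"expected a finite number, got {raw!r}")
        return value
    if kind == "boolean":
        if raw.lower() not in ("true", "false"):
            raise ValueError(f"expected a boolean, got {raw!r}")
        return raw.lower() == "true"
    raise ValueError(f"unsupported schema type: {kind!r}")


if __name__ == "__main__":
    contract_schema = {
        "type": "object",
        "properties": {
            "vendor": {"type": "string"},
            "annual_price": {"type": "number"},
            "auto_renew": {"type": "boolean"},
        },
    }

    def stub_model(prompt: str) -> str:
        """Hypothetical stand-in for a language model: answers by the last prompt line."""
        answers = {"vendor:": " Acme Corp", "annual_price:": " 12000", "auto_renew:": " true"}
        return answers[prompt.rsplit("\n", 1)[-1]]

    result = fill_schema(contract_schema, "Extract the contract terms.", stub_model)
    print(json.dumps(result))
```

Because the structure never passes through the model, the output is always parseable. A bad value surfaces as an explicit error rather than as malformed or unexpected text.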

Creating better AI

AI will keep advancing, but it must be grounded in customer needs to provide real value. For Ramp, we’re focused on developing AI that gets work done, uses data responsibly, builds trust through improvement, and is designed safely from the start. By following these principles, we'll keep building AI that serves our customers rather than hype.

If you like our approach to building products, we’d love to connect. Check out our careers page.
